Nvidia debuts new products for robotics developers, including Jetson Orin Nano • TechCrunch


Amid the festivities at its fall 2022 GTC conference, Nvidia took the wraps off new robotics-related hardware and services aimed at companies developing and testing machines across industries like manufacturing. Isaac Sim, Nvidia’s robotics simulation platform, will soon be available in the cloud, the company said. And Nvidia’s Jetson lineup of system-on-modules is expanding with Jetson Orin Nano, a system designed for low-powered robots.

Isaac Sim, which launched in open beta last June, allows designers to simulate robots interacting with mockups of the real world (think digital re-creations of warehouses and factory floors). Users can generate data sets from simulated sensors to train the models that run on real-world robots, drawing on synthetic data from batches of parallel, unique simulations to improve those models' performance.

It's not necessarily just marketing bluster. Some research suggests that synthetic data has the potential to address many of the development challenges facing companies that are trying to operationalize AI. MIT researchers recently found a way to classify images using synthetic data, and nearly every major autonomous vehicle company uses simulation data to supplement the real-world data it collects from cars on the road.

Nvidia says the upcoming release of Isaac Sim will include Nvidia cuOpt, the company's real-time fleet task assignment and route-planning engine, for optimizing robot path planning. Isaac Sim is available on AWS RoboMaker and Nvidia NGC, from which it can be deployed to any public cloud, and will soon come to Nvidia's Omniverse Cloud platform.

“With Isaac Sim in the cloud … teams can be located across the globe while sharing a virtual world in which to simulate and train robots,” Nvidia senior product marketing manager Gerard Andrews wrote in a blog post. “Running Isaac Sim in the cloud means that developers will no longer be tied to a powerful workstation to run simulations. Any device will be able to set up, manage and review the results of simulations.”

Jetson Orin Nano

Back in March, Nvidia introduced Jetson Orin, the next generation of the company’s Arm-based, single-board PCs for edge computing use cases. The first in the line was the Jetson AGX Orin, and Orin Nano expands the portfolio with more affordable configurations.

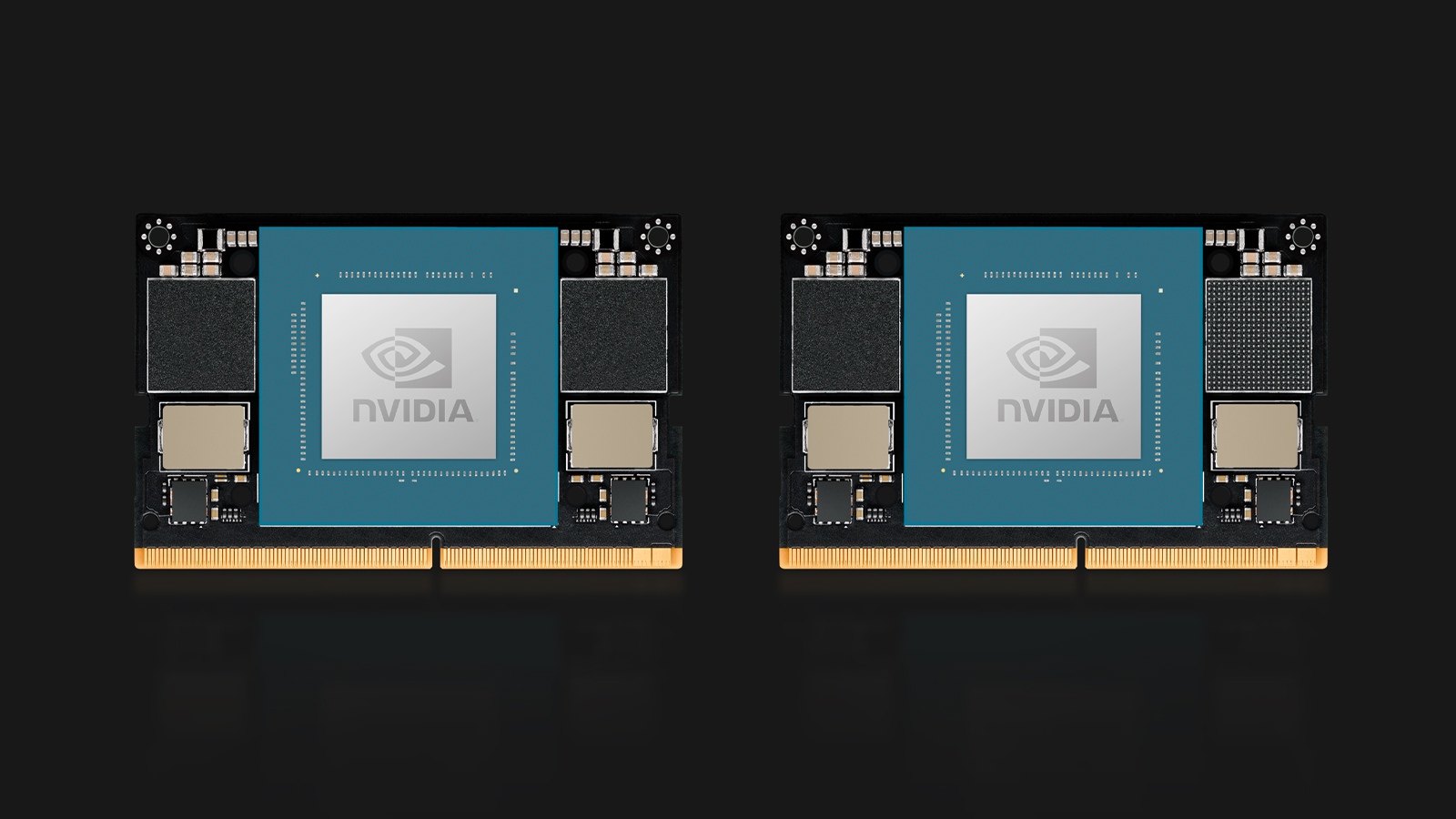

Image Credits: Nvidia

The Orin Nano delivers up to 40 trillion operations per second (TOPS), the number of computing operations the chip can handle at 100% utilization, in the smallest Jetson form factor to date. It sits at the entry level of the Jetson family, which now includes six Orin-based production modules intended for a range of robotics and local, offline computing applications.

Available in modules compatible with Nvidia's previously announced Orin NX, the Orin Nano supports AI application pipelines with an Ampere architecture GPU (Ampere being the GPU architecture Nvidia launched in 2020). Two versions will be available in January, starting at $199: the Orin Nano 8GB, which delivers up to 40 TOPS with power configurable from 7W to 15W, and the Orin Nano 4GB, which reaches up to 20 TOPS with power configurable from 5W to 10W.

"Over 1,000 customers and 150 partners have embraced Jetson AGX Orin since Nvidia announced its availability just six months ago, and Orin Nano will significantly expand this adoption," Nvidia VP of embedded and edge computing Deepu Talla said in a statement. "With an orders-of-magnitude increase in performance for millions of edge AI and [robotics] developers, Jetson Orin Nano sets [a] new standard for entry-level edge AI and robotics." (By comparison, the Jetson AGX Orin costs well over a thousand dollars, a substantial difference from the Orin Nano's $199 starting price.)
